Concept

Every game we see begins as a simple concept or idea: a basic notion of what the game could be about. For instance, a game concept could be a futuristic 3D shoot 'em up designed to fit into today's violent society. It can also be something as simple as an action/adventure game in which you control a pirate.

The idea can also start from simply wanting to make a follow-up or sequel to an existing title, or a game based on an existing non-gaming character, story or franchise. One example is a Star Wars game, although that would require George Lucas's permission (see my post on George Lucas). Another is a game meant to simulate some real-world experience, as with sports, flight or driving simulations. In these cases, development can begin with the company simply deciding that it wants to make a game that simulates the real-life sport of motor racing, or one based on the television series Lost.

Pre-Production

The next step in the game development process is usually referred to as the pre-production phase. Here a pre-production team, which typically includes a varying number of producers, designers, programmers, artists and writers, works on tasks such as writing the storyline, creating storyboards, and putting together a design document. This document details the game's goals, level designs, gameplay mechanics and overall blueprint.

How much freedom the pre-production team has in each of these areas depends on the type of game being made. When a game is based on a completely original idea, the writers, artists and designers can create whatever they imagine, constrained only by the limitations of the hardware. The story and characters are limited only by the imaginations of the people on the pre-production team.

When the game is based on a licensed franchise or simulates a real-world event, that freedom is often limited to what the franchise or event in question allows. If a company is working on a game based on a Pixar license, there will often be restrictions on what the characters can do or say and where the storyline can go. There will usually also be guidelines that stipulate precisely what the characters in the game must look like.

Likewise, if a football simulation is being developed, the designers have to mimic the real-life rules and regulations of the sport. New characters, teams and rules may be added. However, an FA-licensed football simulation must have a foundation based on the real-life players, teams, rules, regulations and look of the FA.

Next comes the storyline (if the game requires one). Writing the storyline is a hugely important step because it defines the main characters, plot, setting and overall theme. It can be as simple as coming up with the names of the characters entering a racing tournament. It can also be much bigger, with hours of spoken dialogue, as in the Grand Theft Auto games. Of course, if the project is a simple backgammon simulation with no characters or plot planned, this step is skipped.

Once the storyline is complete, the next step is to put together a storyboard for the game. This is a visual representation of the storyline that uses sketches, concept art and text to explain what happens in each scene. Storyboards are mostly made for the cinematic cut-scenes, whether pre-rendered CG or real-time, but they may also be used to plan general gameplay.

The third aspect of the pre-production phase, carried out alongside writing the story and crafting the storyboards, is assembling the game's design document. Besides the storyline and storyboards, the design document sets out the designers' overall blueprint for the game. It covers how the game will be played, what each menu or screen will look like, and what the controls for the character or characters are. It also defines the game's goal and the rules for winning and losing, and includes maps of the different worlds or levels.

At this stage the designers, along with the software engineers, must decide things such as what happens on screen when a specific button or key is pressed. Most of the time, however, is spent on questions such as exactly what is in each world, what can and cannot be interacted with, and how each non-player character (NPC) reacts to what the player-controlled character does.

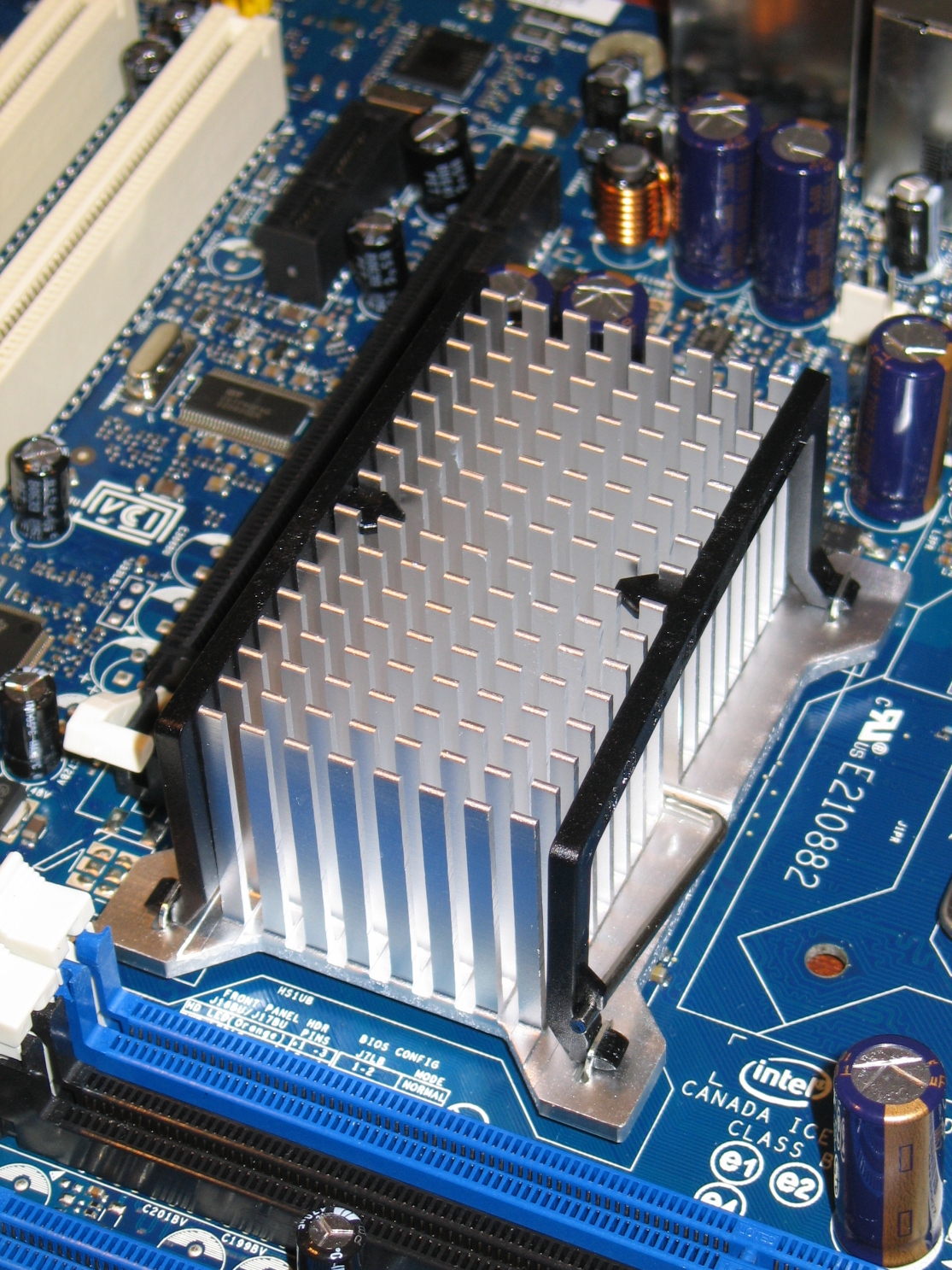

Everyone involved must also consider the limitations of the platform the game is being created for. For consoles, they must also consider which standards the hardware manufacturer requires a game to meet before it is approved for release on the system.

Production

After pre-production is complete and the game's overall blueprint has been finalized, development enters the production phase, and a larger group of producers, designers, artists and programmers is brought into the mix.

The producer(s) work with the design, art and programming teams to make sure everyone is working together. Their main jobs are to create schedules for the engineers and artists, to make sure those schedules are kept, and to ensure the goals of the design are followed throughout development. Producers also deal with any licenses the game uses and make sure the company's marketing department knows what it needs to know about the title.

During the production phase, the artists build all of the animations and art that you'll see in the game. Programs such as Maya and 3D Studio Max are often used to model the game's environments, objects, characters and menus; essentially everything. The art team also creates the texture maps that are applied to the 3D models to give them more life.

Meanwhile, the programmers are coding the game's library, engine and artificial intelligence (AI). The library is usually something the company has already created for use across all of its games. It is constantly updated and tweaked to meet the new goals and expectations of newer titles. Often the library team also has to write new custom code specifically for the title in development.

Post-Production

The final stage of a game's development is post-production. It begins when the game is "feature complete": all of the code has been written and all of the art has been finished, though problems may remain. At this point an alpha version of the game is created and given to the test department. Testers look for bugs and major flaws that need to be fixed by the artists or programmers.

Once the bugs and major flaws have been identified and addressed, a beta version of the game is produced and sent back to the test department. This is where the hardcore testing happens. Every bug, however major or minor, is documented and, where possible, fixed.

Once the game has been approved by the console manufacturer (or, for PC games, simply finished by the developer), all that's left is for it to be manufactured and distributed to stores, where you can go out and buy it.